1. Overview

Kaggle competition page: ML2021Spring-hw2 | Kaggle

Task: predict the phoneme class of each audio frame (a 39-class classification problem).

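Training data shape: 1229932 × 429. Each sample concatenates the current frame with the five frames before it and the five frames after it (11 frames in total, 429 = 11 × 39), which makes it easier to predict the class of the current frame.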
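To make the 429-dimensional input concrete, here is a minimal sketch of how such a context window could be built. It assumes 39 features per frame and pads the edges of the sequence by repeating the first or last frame; the name `add_context` and the padding rule are illustrative assumptions, not the competition's actual preprocessing.

```python
def add_context(frames, k=5):
    """Concatenate each frame with its k left and k right neighbours.

    frames: list of per-frame feature lists (all the same length).
    Edge frames are padded by clamping the index (an assumption).
    """
    n = len(frames)
    out = []
    for i in range(n):
        window = []
        for j in range(i - k, i + k + 1):
            j = min(max(j, 0), n - 1)  # clamp at the sequence boundaries
            window.extend(frames[j])
        out.append(window)
    return out


# Toy data: 20 frames with 39 features each, frame i filled with the value i
frames = [[float(i)] * 39 for i in range(20)]
windows = add_context(frames)
print(len(windows), len(windows[0]))
```

With `k=5` and 39 features per frame, every output row has 11 × 39 = 429 values, matching the width of the training matrix.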

Test data shape: 451552 × 429

2. Strong Baseline

I have not passed the strong baseline yet.

Resampling the training set

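The class sizes in the training set are extremely imbalanced: the largest class has 178713 samples, while the smallest has only 3883. On the validation set, accuracy on the small classes was generally lower than on the large ones, so every class with fewer than 15000 samples is oversampled up to 15000. After this change, accuracy on the small classes improved markedly, but overall accuracy did not improve noticeably, possibly because the small classes are just as rare in the test set.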
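The per-class comparison described above can be measured with a small helper. This is only a sketch: `per_class_accuracy` is a hypothetical name, and the labels are assumed to be strings such as "0" to "38".

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Return {label: fraction of samples with that true label predicted correctly}."""
    total = Counter(y_true)
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    # Counter returns 0 for labels that were never predicted correctly
    return {label: correct[label] / total[label] for label in total}


# Toy example: class "1" is rare and always misclassified
acc = per_class_accuracy(["0", "0", "0", "1"], ["0", "0", "1", "0"])
print(acc)
```

Comparing this dictionary before and after oversampling shows whether the rare classes gain accuracy even when the overall number barely moves.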

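    import numpy as np

    # Indices of the training samples in each class (labels are the strings "0" to "38")
    train_class = []
    class_idx = np.arange(train_x.shape[0])
    for i in range(39):
        train_class.append(class_idx[train_y == str(i)])

    # `vote` maps each label to its sample count; it is assumed to be
    # defined earlier, e.g. as collections.Counter(train_y)
    min_num = 15000
    for i in range(39):
        addition = min_num - vote[str(i)]
        if addition <= 0:
            continue
        print(i, addition)
        # Draw `addition` samples (with replacement) from the original members of class i
        idx = np.random.choice(train_class[i], addition)
        train_x = np.vstack([train_x, train_x[idx]])
        train_y = np.append(train_y, train_y[idx])

Enlarging the network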

    import torch.nn as nn


    class Classifier1(nn.Module):
        def __init__(self):
            super(Classifier1, self).__init__()
            self.dropout = nn.Dropout(0.5)
            # Fully connected layers: 429 -> 2048 -> 2048 -> 2048 -> 1024 -> 512 -> 128 -> 39
            self.layer1 = nn.Linear(429, 2048)
            self.layer2 = nn.Linear(2048, 2048)
            self.layer3 = nn.Linear(2048, 2048)
            self.layer4 = nn.Linear(2048, 1024)
            self.layer5 = nn.Linear(1024, 512)
            self.layer6 = nn.Linear(512, 128)
            # BatchNorm0 normalizes the raw input; the others follow each hidden layer
            self.BatchNorm0 = nn.BatchNorm1d(429)
            self.BatchNorm1 = nn.BatchNorm1d(2048)
            self.BatchNorm2 = nn.BatchNorm1d(2048)
            self.BatchNorm3 = nn.BatchNorm1d(2048)
            self.BatchNorm4 = nn.BatchNorm1d(1024)
            self.BatchNorm5 = nn.BatchNorm1d(512)
            self.BatchNorm6 = nn.BatchNorm1d(128)
            self.out = nn.Linear(128, 39)
            self.act_fn = nn.LeakyReLU()

        def forward(self, x):
            x = self.BatchNorm0(x)

            # Each hidden block: Linear -> LeakyReLU -> BatchNorm -> Dropout
            x = self.layer1(x)
            x = self.act_fn(x)
            x = self.BatchNorm1(x)
            x = self.dropout(x)

            x = self.layer2(x)
            x = self.act_fn(x)
            x = self.BatchNorm2(x)
            x = self.dropout(x)

            x = self.layer3(x)
            x = self.act_fn(x)
            x = self.BatchNorm3(x)
            x = self.dropout(x)

            x = self.layer4(x)
            x = self.act_fn(x)
            x = self.BatchNorm4(x)
            x = self.dropout(x)

            x = self.layer5(x)
            x = self.act_fn(x)
            x = self.BatchNorm5(x)
            x = self.dropout(x)

            x = self.layer6(x)
            x = self.act_fn(x)
            x = self.BatchNorm6(x)
            x = self.dropout(x)

            # Output logits for the 39 phoneme classes
            x = self.out(x)
            return x

Using L2 regularization

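    # L2 regularization adds a |w|^2 penalty to the loss to keep the weights small;
    # its gradient is proportional to w, so it is essentially equivalent to weight_decay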

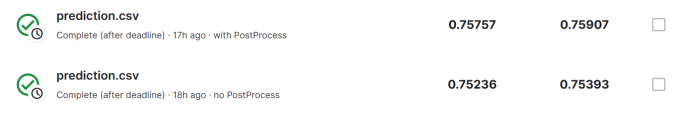
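The equivalence mentioned above can be checked numerically in plain Python. The sketch below assumes vanilla SGD and a penalty of (λ/2)·|w|², whose gradient is λ·w; the function names are illustrative. With adaptive optimizers such as Adam, an L2 penalty and decoupled weight decay generally do not give identical updates.

```python
import math


def sgd_step_l2(w, grad, lr, lam):
    """One SGD step on loss + (lam / 2) * sum(w_i ** 2)."""
    # The penalty contributes lam * w_i to each gradient component
    return [wi - lr * (gi + lam * wi) for wi, gi in zip(w, grad)]


def sgd_step_weight_decay(w, grad, lr, lam):
    """One SGD step on the plain loss, with weights shrunk by (1 - lr * lam)."""
    return [wi * (1 - lr * lam) - lr * gi for wi, gi in zip(w, grad)]


w = [1.0, -2.0, 0.5]
grad = [0.1, 0.2, -0.3]  # gradient of the unregularized loss (toy values)
a = sgd_step_l2(w, grad, lr=0.1, lam=0.01)
b = sgd_step_weight_decay(w, grad, lr=0.1, lam=0.01)
same = all(math.isclose(x, y) for x, y in zip(a, b))
print(same)
```

In PyTorch this corresponds to passing `weight_decay` to the optimizer (for example `torch.optim.SGD(model.parameters(), lr=..., weight_decay=...)`) instead of adding the penalty to the loss by hand.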

Post-processing (clear improvement)

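The audio signal is continuous, while each frame is only 25 ms long, so a single phoneme usually spans several consecutive frames that all share the same class. A prediction that differs from both of its neighbours, when those neighbours agree with each other, is therefore likely wrong and is replaced by the neighbours' class.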

    # Smooth isolated predictions, e.g. 11 3 11 -> 11 11 11
    count = 0
    for i in range(1, len(predict) - 1):
        previous_ = predict[i - 1]
        next_ = predict[i + 1]
        current_ = predict[i]
        # The current frame disagrees with both neighbours, and the neighbours agree
        if (previous_ != current_) and (next_ != current_) and (previous_ == next_):
            predict[i] = previous_
            count += 1
    # Report the share of corrected frames as a percentage
    print('total number of corrections %d, correction percent %.2f%%' % (count, 100 * count / len(predict)))
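The same rule can be wrapped in a function and tried on a toy sequence. `smooth_isolated` is a name introduced here for illustration; like the loop above, it works left to right on a copy of the list, so an earlier correction is visible to later positions.

```python
def smooth_isolated(predict):
    """Replace a label that differs from two agreeing neighbours.

    Returns (smoothed list, number of corrections). The input is not modified.
    """
    result = list(predict)
    count = 0
    for i in range(1, len(result) - 1):
        previous_, current_, next_ = result[i - 1], result[i], result[i + 1]
        if previous_ == next_ and current_ != previous_:
            result[i] = previous_
            count += 1
    return result, count


smoothed, n_fixed = smooth_isolated([11, 3, 11, 11, 5, 7])
print(smoothed, n_fixed)  # [11, 11, 11, 11, 5, 7] 1
```

The 5 near the end is left alone because its neighbours (11 and 7) disagree with each other, so there is no evidence for which label should replace it.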

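My guess is that passing the strong baseline requires sequence models such as LSTM or GRU, or training several models and ensembling them.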
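As a rough illustration of the ensembling idea, here is a minimal majority-vote sketch. It assumes each model outputs one label per frame; `majority_vote` is a hypothetical helper, and ties go to the label that appears first, that is, the one from the earlier model in the list. It shows the technique only and says nothing about how much it would help on this task.

```python
from collections import Counter


def majority_vote(predictions):
    """Combine per-frame labels from several models by majority vote.

    predictions: list of label lists, one per model, all the same length.
    """
    voted = []
    for labels in zip(*predictions):
        # most_common(1) returns [(label, count)] for the most frequent label
        voted.append(Counter(labels).most_common(1)[0][0])
    return voted


# Three toy models predicting three frames
combined = majority_vote([[1, 2, 3], [1, 5, 3], [4, 5, 6]])
print(combined)  # [1, 5, 3]
```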